As AI becomes integral to business operations, robust governance is vital to mitigate risks like data breaches, regulatory violations, and ethical concerns. Infotechtion’s AI Governance Platform provides tools for data quality, traceability, human oversight, and compliance, aligning with the EU AI Act and ISO/IEC 42001 to help organizations build accountable and scalable AI systems.

Infotechtion’s AI Governance Platform offers a comprehensive approach to data governance, traceability, and oversight, tailored to compliance and risk management needs. The platform delivers content classification, lifecycle monitoring, and audit capabilities, ensuring high-quality inputs for AI systems and robust data activity tracing.

Figure 1: i-ARM AI Governance Principles

Infotechtion’s approach aligns with EU AI Act requirements by focusing on data controls, transparency, and accountability, positioning it as a reliable solution for organisations seeking to balance innovation with ethical and regulatory adherence.

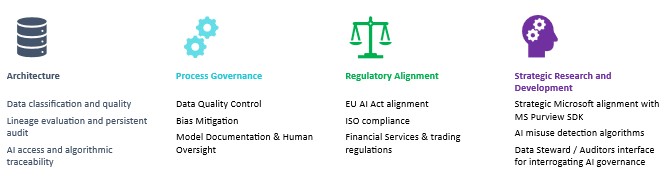

Architecture Principles

Figure 2: AI Governance Architecture Principles

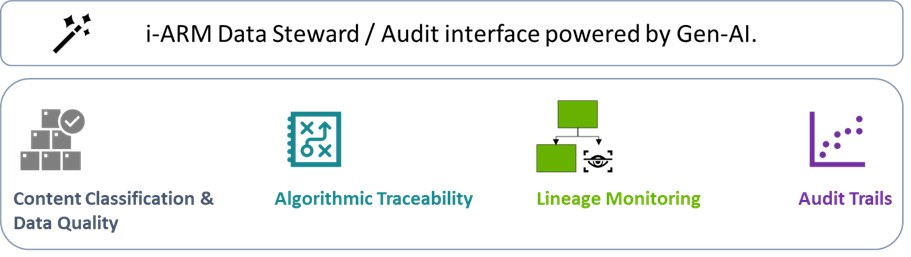

- Content Classification and Data Quality: Infotechtion’s platform ensures data hygiene with its AI Classification Engine, which uses Microsoft classifiers to distinguish valuable and sensitive data from trivial data. This process provides high-quality, current, and risk-free information for AI models, focusing on content risk for compliance.

- Algorithmic Traceability: Infotechtion’s platform supports the EU AI Act’s mandate for tracking AI algorithms by leveraging Persistent Audit and Discovery modules to capture metadata and logs across the data lifecycle. Future enhancements aim to integrate AI platforms to improve model traceability.

- Lineage Monitoring: Infotechtion’s Persistent Audit tool and Records Lifecycle solution enhance Microsoft Purview by extending log storage, tracking data from creation to disposition, and ensuring continuous oversight for compliance.

- Audit Trails: Infotechtion’s i-ARM reporting provides detailed access logs for AI usage, recording user interactions with AI features to support security and compliance monitoring. The Persistent Audit feature adds metadata and extends log retention. Together, these tools give auditors greater visibility into AI usage and data flows. Ongoing research aims to integrate these audit capabilities with SIEM solutions such as Microsoft Sentinel for SOC alerts and incident management.

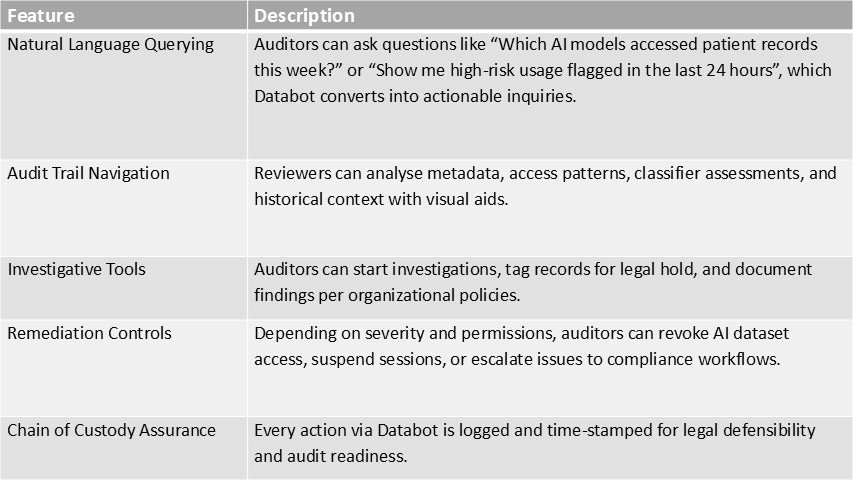

- Data Steward / Auditor Interface: i-ARM Databot is a secure, role-restricted platform for authorized auditors to investigate AI activities and respond quickly. Features include:

Process Governance

- Model Documentation: The Infotechtion platform emphasizes governance processes that produce AI model documentation (such as model cards or architecture descriptions). While the i-ARM platform helps record data sources and activities, Infotechtion AI Governance services provide process-driven assurance that AI model design and training practices, which sit outside the platform, are formally recorded.

- Human Oversight: Infotechtion employs human-in-the-loop controls to monitor AI adoption and flag risks, ensuring vetted outputs. AI usage reports provide transparency, while dashboards enable reviewing AI outputs. AI governance services avoid automation bias and support continuous oversight in AI decisions with integrated review / remediation workflows.

Infotechtion focuses on documenting data workflows and usage through “maintaining project documentation and risk controls” for AI projects.

- Data Quality Controls: Effective governance needs quality data. Infotechtion ensures this by removing redundant content and enforcing rules for retention, disposal, and archival through use of its solution i-ARM.

- Sensitive content is automatically labelled and quarantined (based on rules) to prevent leaks.

- Monitoring dashboards track data-sharing metrics to detect anomalies, maintaining data accuracy and ensuring that AI processes reliable information.

- Bias Mitigation: The EU AI Act requires bias prevention in AI systems. Infotechtion’s services address this through data governance, filtering out sensitive or irrelevant data, and ensuring diverse sources.

While we continue to introduce advanced models for explicit bias detection and fairness metrics, workflow-driven human procedures already address this need today.
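The rule-based labelling and quarantine step described under Data Quality Controls can be illustrated with a minimal Python sketch. The rule names, regular expressions, and the `classify` function are hypothetical and are not part of i-ARM or Microsoft Purview; a production system would rely on trained classifiers rather than two regex patterns.

```python
import re
from dataclasses import dataclass, field

# Hypothetical rules mapping a label to a pattern. These two regexes only
# illustrate the control flow; they are not production-grade detectors.
RULES = {
    "email-address": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "payment-card": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}


@dataclass
class Decision:
    labels: list = field(default_factory=list)
    quarantined: bool = False


def classify(text: str) -> Decision:
    """Attach every matching label and quarantine if any rule fires."""
    labels = [name for name, pattern in RULES.items() if pattern.search(text)]
    return Decision(labels=labels, quarantined=bool(labels))


if __name__ == "__main__":
    print(classify("Quarterly roadmap draft"))
    print(classify("Contact jane.doe@example.com about invoice 12"))
```

In this sketch, any matching rule triggers quarantine. A real policy would more likely map each label to its own action (label only, restrict sharing, quarantine), which is a design choice the source does not specify.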

Regulatory Alignment

- EU AI Act Alignment: The EU AI Act is the first comprehensive AI regulation globally, introducing a risk-based framework with strict transparency and accountability requirements. Technically, the platform implements several Act-driven controls.

- The Act requires high-risk AI to have detailed use logs and human oversight.

- Infotechtion’s logging of all AI interactions and use of compliance dashboards (including leveraging premium assessments available in Microsoft Purview Compliance Manager) directly supports this.

- The requirement for documentation of data sources and algorithms is partly met through its automated classification and lineage tools (leveraging Microsoft Data Governance framework).

- Data flows are tracked, though algorithmic provenance must be supplemented.

- The platform’s features for monitoring sharing, labelling data, and tracking usage contribute to satisfying transparency mandates.

- Industry Standards (ISO/IEC, etc.): Infotechtion’s platform aligns with standards like ISO/IEC 27001, ISO 15489, and ISO 16175, emphasizing secure records management and governance processes.

Features like audit logs, governance dashboards, and Microsoft Purview integration support ISO/IEC 42001 principles, addressing risk assessment and lifecycle oversight effectively.

- Global Governance Context: The EU AI Act applies extraterritorially to any organization whose AI “affects people in the EU.” Infotechtion’s solution (built on cloud-based Microsoft services) is inherently global and is pitched at multinational clients with regional compliance requirements.

Strategic Research and Development

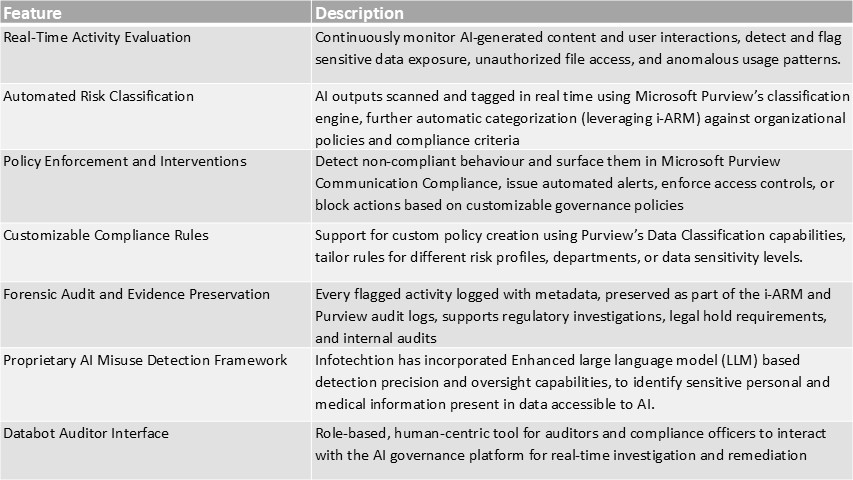

Infotechtion has a strategic collaboration with Microsoft to integrate its AI Governance Platform with the Microsoft Purview SDK. This partnership represents a significant advancement in enabling real-time compliance monitoring and intervention for AI interactions.

Microsoft Security Blog: Microsoft Purview SDK Public Preview | Microsoft Community Hub

“Microsoft Purview SDK and APIs enable seamless integration of enterprise-grade data security and compliance controls into AI applications, helping prevent data oversharing, mitigate insider risks, and govern AI runtime interactions,” said Vivek Bhatt, CTO of Infotechtion.

The integration with the Microsoft Purview SDK and APIs brings the following key capabilities to Infotechtion’s AI Governance Platform:

Infotechtion AI Misuse Detection Framework

The framework is the result of focused R&D from 2023 through 2025 and includes the following core innovations:

- Purpose-Built AI Classifiers: Infotechtion developed custom large language model classifiers trained on labelled data to detect patterns associated with unauthorized AI access to:

- Sensitive personal data (e.g., PII, biometric identifiers, racial or ethnic origin).

- Medical records and health-related data, subject to heightened protection under both GDPR and the EU AI Act.

This framework supports compliance with EU AI Act obligations under Article 10 (Data and Data Governance), Article 72 (Post-Market Monitoring), and Article 13 (Transparency and Provision of Information to Deployers), particularly for high-risk AI systems processing medical or biometric data.

These classifiers go beyond keyword matching by evaluating context, access patterns, and metadata signals to distinguish legitimate usage from potential misuse.

- Behavioural Pattern Detection: By combining content classification with behavioural analysis (e.g., frequency, time-of-day, access anomalies), the framework flags suspicious AI behaviour even when access may appear formally authorized.

- Risk Scoring Engine: Detected events are assigned risk scores based on severity, regulatory exposure, and historical patterns. High-risk events trigger immediate alerts to designated compliance roles.

- Self-Learning Capabilities: The detection framework incorporates a feedback loop that continuously refines classifier accuracy based on investigator input, improving over time.
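As a rough illustration of how the behavioural signals and risk scoring described above might fit together, the following Python sketch combines three normalized factors into a weighted score and raises an alert above a threshold. The weights, the 0.7 threshold, the business-hours window, the request-rate limit, and all names (`AccessEvent`, `behaviour_bump`, `risk_score`, `should_alert`) are assumptions made for this example; the source does not describe Infotechtion’s actual scoring formula.

```python
from dataclasses import dataclass

# Assumed weights and alert threshold, for illustration only.
WEIGHTS = {"severity": 0.5, "regulatory": 0.3, "history": 0.2}
ALERT_THRESHOLD = 0.7


@dataclass
class AccessEvent:
    severity: float          # 0..1, e.g. from content classification
    regulatory: float        # 0..1, estimated regulatory exposure
    prior_incidents: int     # earlier flagged events for the same actor
    hour: int                # 0..23, local hour of access
    requests_last_hour: int  # access frequency signal


def behaviour_bump(event: AccessEvent) -> float:
    """Additive bump for off-hours or unusually frequent access."""
    bump = 0.0
    if event.hour < 7 or event.hour >= 19:
        bump += 0.1
    if event.requests_last_hour > 100:
        bump += 0.1
    return bump


def risk_score(event: AccessEvent) -> float:
    """Weighted sum of the three factors plus behaviour bump, capped at 1.0."""
    history = min(event.prior_incidents, 5) / 5
    base = (
        WEIGHTS["severity"] * event.severity
        + WEIGHTS["regulatory"] * event.regulatory
        + WEIGHTS["history"] * history
    )
    return min(1.0, base + behaviour_bump(event))


def should_alert(event: AccessEvent) -> bool:
    """True when the score reaches the assumed alert threshold."""
    return risk_score(event) >= ALERT_THRESHOLD


if __name__ == "__main__":
    routine = AccessEvent(0.2, 0.1, 0, hour=10, requests_last_hour=5)
    suspicious = AccessEvent(0.9, 0.9, 5, hour=2, requests_last_hour=500)
    print(risk_score(routine), should_alert(routine))
    print(risk_score(suspicious), should_alert(suspicious))
```

Investigator feedback, as described under Self-Learning Capabilities, could in principle be used to adjust the weights or threshold over time; that step is omitted here.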

Conclusion

The innovations included in Infotechtion’s AI governance solution, coupled with the earlier Microsoft Purview integration, provide organizations with the toolset necessary to meet and exceed EU AI Act obligations for transparency, traceability, risk management, and human oversight.

These developments align directly with regulatory expectations under the EU AI Act and GDPR:

- High-Risk Systems Management: Systems that process sensitive health or personal data fall under “high-risk” classification. Infotechtion’s framework provides concrete tools to detect and manage risks associated with such systems.

- Human Oversight and Explainability: By combining AI-driven detection with human-led investigation and decision-making, Infotechtion operationalizes the principle of meaningful human oversight, a cornerstone of trustworthy AI governance.

- Forensic Readiness and Transparency: The Databot interface enhances traceability, accountability, and incident response, helping organizations meet legal requirements for transparency, documentation, and timely mitigation.

“With the addition of Microsoft Purview SDK integration, alongside Infotechtion’s proprietary AI Misuse Detection Framework and the Databot interface, Infotechtion is moving beyond passive monitoring to deliver active, enforcement-driven compliance governance,” said Atle Skjekkeland, CEO of Infotechtion.

While further expansion into model-centric governance (e.g., algorithm documentation and bias assessment) remains recommended, the current platform already delivers one of the most regulator-ready AI governance solutions in the market.

Contact us today at contact@infotechtion.com to get started on your safe and responsible AI adoption journey to make sure you are ready for your workplace needs.